How AI Tools Are Trained: From Data to Deployment

Artificial intelligence is everywhere, from predictive text on your phone to sophisticated chatbots that handle customer queries. Yet many marketers and tech enthusiasts still ask, “How are AI tools trained?” Understanding the journey from raw data to a fully deployed model demystifies AI and shows why data quality, human expertise, and iterative testing matter so much.

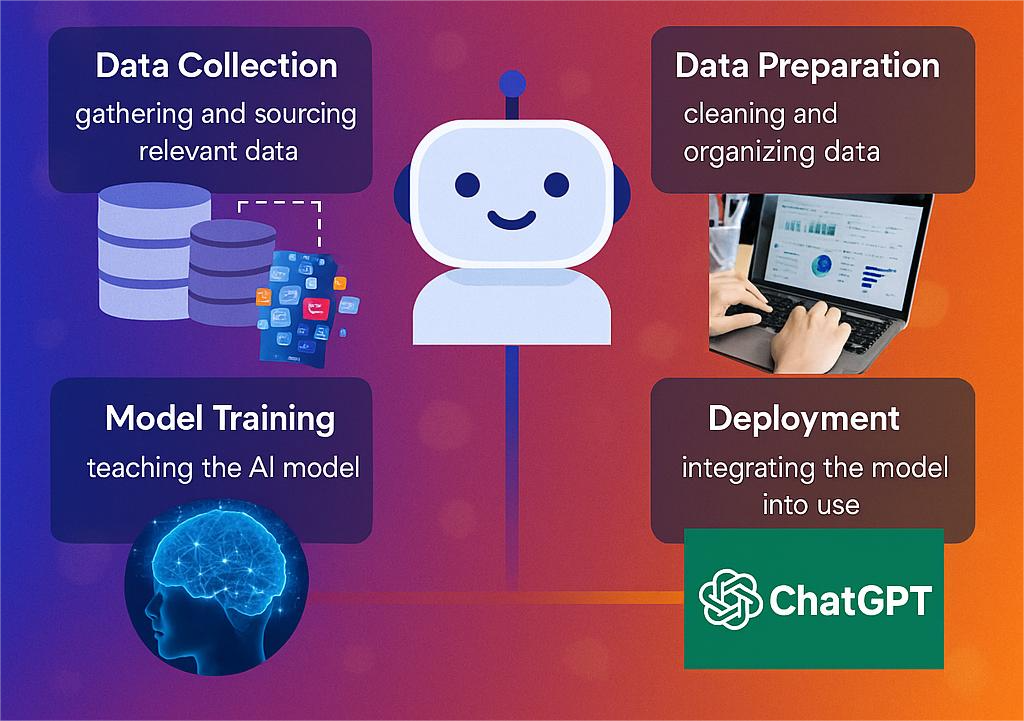

This in-depth guide walks through each stage (data collection, cleaning, model selection, training, evaluation, and deployment) to give you a clear, non-technical overview of the entire AI pipeline.

1️⃣ Data Collection: Finding the Right Fuel

AI systems are only as good as the information fed into them. Common data sources include:

- Public datasets (e.g., Kaggle, UCI Machine Learning Repository)

- Company databases (CRM, email, web analytics)

- User-generated data (social media posts, product reviews)

- Third-party providers (paid data APIs)

Tip: Collect data that directly answers your problem statement — quality beats volume every time.

2️⃣ Data Cleaning & Labeling: Turning Noise into Knowledge

Raw data is messy. Duplicate entries, missing values, and inconsistent formats can cripple a model. Key steps include:

- Deduplication & normalization to maintain consistency

- Removing outliers that skew predictions

- Labeling samples (essential for supervised learning)

Many teams use tools like Label Studio or Amazon SageMaker Ground Truth for scalable annotation.
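To make the cleaning steps above concrete, here is a minimal sketch in plain Python. The field names (`email`, `spend`) and the outlier cutoff of 5 are assumptions chosen for illustration, not a recommendation for any particular dataset.

```python
from statistics import median


def clean_records(records):
    """Normalize, deduplicate, and drop outliers from a list of dicts."""
    # Normalization: trim whitespace and lowercase emails so duplicates match.
    normalized = []
    for r in records:
        email = (r.get("email") or "").strip().lower()
        spend = r.get("spend")
        if not email or spend in (None, ""):
            continue  # drop rows with missing values
        normalized.append({"email": email, "spend": float(spend)})

    # Deduplication: keep the first record seen for each email.
    seen, unique = set(), []
    for r in normalized:
        if r["email"] not in seen:
            seen.add(r["email"])
            unique.append(r)
    if not unique:
        return []

    # Outlier removal: drop values far from the median, measured in units of
    # the median absolute deviation (MAD). The cutoff of 5 is arbitrary.
    spends = [r["spend"] for r in unique]
    mid = median(spends)
    mad = median(abs(s - mid) for s in spends) or 1.0
    return [r for r in unique if abs(r["spend"] - mid) / mad <= 5]


raw = [
    {"email": " Ana@Example.com ", "spend": "20"},
    {"email": "ana@example.com", "spend": "20"},   # duplicate after normalizing
    {"email": "bo@example.com", "spend": "25"},
    {"email": "cy@example.com", "spend": "22"},
    {"email": "di@example.com", "spend": "9000"},  # likely an outlier
    {"email": "", "spend": "30"},                  # missing email
]
cleaned = clean_records(raw)
```

In this sample, the duplicate, the row with no email, and the extreme spend value are all filtered out, leaving three records. Real projects usually need domain-specific rules on top of generic checks like these.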

3️⃣ Choosing a Model Architecture

The architecture determines how your AI “thinks.” Popular choices include:

| Task | Common Models |
| --- | --- |
| Text Generation | Transformers (e.g., GPT) |
| Image Classification | Convolutional Neural Networks (CNNs) |
| Tabular Data | Gradient-Boosted Trees |

If you’d like a refresher on the underlying tech, check out our earlier post, What Is AI? A Beginner’s Guide.

4️⃣ Training the Model

Now the heavy lifting begins. Engineers feed clean, labeled data into the chosen architecture and iterate until the model converges on accurate predictions. Standard practices:

- Batch processing to manage memory efficiently

- Learning-rate scheduling to keep training stable and reduce the risk of over- or under-fitting

- Early stopping when validation performance stops improving

Cloud platforms such as Google Vertex AI and Azure ML automate much of this workflow.
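The three practices above can be sketched in a few lines of plain Python. This is a toy example that fits a straight line, not how production frameworks are implemented; the function name, the halve-every-50-epochs schedule, and the default values are all assumptions made for illustration.

```python
import random


def train(train_data, val_data, epochs=200, batch_size=4,
          base_lr=0.1, patience=10, min_delta=1e-6):
    """Fit y = w*x + b with mini-batch gradient descent."""
    rng = random.Random(0)
    train_data = list(train_data)  # copy so shuffling leaves the caller's list alone
    w, b = 0.0, 0.0
    best_loss, best_params, stale = float("inf"), (w, b), 0

    for epoch in range(epochs):
        # Learning-rate scheduling: halve the step size every 50 epochs.
        lr = base_lr * (0.5 ** (epoch // 50))

        # Batch processing: update the weights on small slices of the data.
        rng.shuffle(train_data)
        for i in range(0, len(train_data), batch_size):
            batch = train_data[i:i + batch_size]
            grad_w = sum(2 * (w * x + b - y) * x for x, y in batch) / len(batch)
            grad_b = sum(2 * (w * x + b - y) for x, y in batch) / len(batch)
            w -= lr * grad_w
            b -= lr * grad_b

        # Early stopping: quit once validation loss stops improving.
        val_loss = sum((w * x + b - y) ** 2 for x, y in val_data) / len(val_data)
        if val_loss < best_loss - min_delta:
            best_loss, best_params, stale = val_loss, (w, b), 0
        else:
            stale += 1
            if stale >= patience:
                break

    return best_params, best_loss


# Synthetic data generated from y = 3x + 1, so the "right answer" is known.
points = [(i / 10 - 1, 3 * (i / 10 - 1) + 1) for i in range(21)]
val_set = points[::4]
train_set = [p for j, p in enumerate(points) if j % 4]
(w, b), loss = train(train_set, val_set)
```

On this synthetic data the fitted `w` and `b` should land close to 3 and 1. Real models have millions of parameters rather than two, but the loop has the same shape.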

5️⃣ Evaluation & Validation

Training accuracy is meaningless without validation. Teams split data into training, validation, and test sets. Key metrics:

- Accuracy / Precision / Recall (for classification)

- RMSE / MAE (for regression)

- BLEU / ROUGE (for language models)

Cross-validation helps confirm that the model generalizes to unseen data. If scores stall, however, it’s back to feature engineering or collecting more representative data.
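For readers who want to see what these terms mean in practice, here is a small sketch of a three-way split, the classification and regression metrics, and k-fold cross-validation. The function names and the 70/15/15 split are illustrative assumptions; libraries such as scikit-learn provide tested versions of all of these.

```python
import math
import random


def split(data, train=0.7, val=0.15, seed=0):
    """Shuffle, then cut into training, validation, and test sets."""
    items = list(data)
    random.Random(seed).shuffle(items)
    n_train = round(len(items) * train)
    n_val = round(len(items) * val)
    return items[:n_train], items[n_train:n_train + n_val], items[n_train + n_val:]


def classification_metrics(y_true, y_pred):
    """Accuracy, precision, and recall for binary labels (1 = positive)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == 1 and p == 1 for t, p in pairs)
    fp = sum(t == 0 and p == 1 for t, p in pairs)
    fn = sum(t == 1 and p == 0 for t, p in pairs)
    return {
        "accuracy": sum(t == p for t, p in pairs) / len(pairs),
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
    }


def regression_metrics(y_true, y_pred):
    """Mean absolute error and root mean squared error."""
    errors = [p - t for t, p in zip(y_true, y_pred)]
    return {
        "mae": sum(abs(e) for e in errors) / len(errors),
        "rmse": math.sqrt(sum(e * e for e in errors) / len(errors)),
    }


def k_fold(items, k=5):
    """Yield (train, held_out) pairs so every item is held out exactly once."""
    for i in range(k):
        held_out = items[i::k]
        train = [x for j, x in enumerate(items) if j % k != i]
        yield train, held_out


scores = classification_metrics([1, 0, 1, 1, 0, 1], [1, 0, 0, 1, 1, 1])
```

Precision asks "of everything flagged positive, how much was right?" while recall asks "of everything truly positive, how much did we catch?" Which one matters more depends on the cost of each kind of mistake.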

6️⃣ Deployment: Shipping the Model to Production

Deployment bridges the gap between code and customers. Typical paths:

- RESTful APIs for real-time predictions

- Batch inference jobs for nightly or daily updates

- Edge deployment for low-latency use cases (IoT, mobile)

MLOps tools like MLflow and Seldon Core manage versioning, monitoring, and rollback.
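As a rough picture of the real-time API path, the sketch below wraps a stand-in "model" in a tiny HTTP endpoint using only Python's standard library. The `predict` function, its weights, and the feature names are hypothetical placeholders; a production service would load a trained model and typically run behind a proper web framework.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


def predict(features):
    """Stand-in for a trained model: a hypothetical hard-coded linear scorer."""
    weights = {"visits": 0.3, "cart_items": 0.5}
    score = sum(weights.get(name, 0.0) * value for name, value in features.items())
    return {"score": round(score, 3), "will_buy": score >= 1.0}


def handle_request(body):
    """Turn a raw JSON request body into (status_code, response_dict)."""
    try:
        features = json.loads(body)
        if not isinstance(features, dict):
            raise ValueError("expected a JSON object")
        return 200, predict(features)
    except (ValueError, TypeError):
        return 400, {"error": "expected a JSON object of numeric features"}


class PredictHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        # Read the request body, score it, and send the result back as JSON.
        length = int(self.headers.get("Content-Length", 0))
        status, payload = handle_request(self.rfile.read(length))
        data = json.dumps(payload).encode()
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)


def serve(port=8000):
    """Start the server; call this manually, it blocks until interrupted."""
    HTTPServer(("127.0.0.1", port), PredictHandler).serve_forever()
```

Calling `serve()` starts a local server that accepts POST requests with a JSON body such as `{"visits": 2, "cart_items": 1}`. Keeping the scoring logic in `handle_request` separate from the HTTP plumbing makes it easy to test without starting a server.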

7️⃣ Monitoring, Feedback & Iteration

Models degrade as user behavior shifts, a concept called model drift. Smart teams:

- Track prediction accuracy in production

- Collect new data for periodic retraining

- A/B test updated models before full rollout

Remember: how AI tools are trained is an ongoing process, not a one-time event.
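One simple way to track accuracy in production is a rolling window over recent predictions. The sketch below is a minimal illustration; the class name, window size, and threshold are assumptions, and real monitoring usually also watches the input data itself because true labels often arrive late.

```python
from collections import deque


class AccuracyMonitor:
    """Track accuracy over the most recent predictions."""

    def __init__(self, window=100, threshold=0.8):
        self.outcomes = deque(maxlen=window)  # old results fall off automatically
        self.threshold = threshold

    def record(self, prediction, actual):
        self.outcomes.append(prediction == actual)

    @property
    def accuracy(self):
        if not self.outcomes:
            return None  # nothing recorded yet
        return sum(self.outcomes) / len(self.outcomes)

    def needs_retraining(self):
        # Only raise the flag once the window is full, to avoid noisy alerts.
        full = len(self.outcomes) == self.outcomes.maxlen
        return full and self.accuracy < self.threshold


monitor = AccuracyMonitor(window=4, threshold=0.75)
for predicted, actual in [("buy", "buy"), ("skip", "skip"), ("buy", "skip"), ("buy", "skip")]:
    monitor.record(predicted, actual)
```

After these four results the window holds two hits and two misses, so accuracy is 0.5 and `monitor.needs_retraining()` returns `True`.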

How AI Tools Are Trained: Practical Applications and Case Study

OpenAI’s ChatGPT relies on reinforcement learning from human feedback (RLHF). After pre-training on vast datasets, human reviewers rank outputs. The model is then fine-tuned to align with human preferences, showing that even cutting-edge AI hinges on human insight.
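The ranking step can be pictured with a tiny sketch. A common way to use a ranking is to expand it into pairwise "this one beat that one" comparisons, which a reward model can then learn from. The function name and sample outputs are hypothetical, and this is an illustration of the idea rather than a description of OpenAI's actual pipeline.

```python
from itertools import combinations


def ranking_to_pairs(ranked_outputs):
    """Expand a best-to-worst ranking into (preferred, rejected) pairs."""
    # combinations() keeps the input order, so the first item of each pair
    # is always the one the reviewer ranked higher.
    return list(combinations(ranked_outputs, 2))


pairs = ranking_to_pairs(["answer A", "answer B", "answer C"])
```

Here a single ranking of three outputs yields three preference pairs: A over B, A over C, and B over C.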

Want to see how AI impacts social platforms? Read our post on How AI Is Changing Social Media Marketing.

Best Practices Checklist

✅ Start with a clear business problem

✅ Collect diverse, representative data

✅ Document data lineage for transparency

✅ Automate pipelines with MLOps tools

✅ Keep a human in the loop for ethics and quality

Conclusion

Understanding how AI tools are trained helps demystify the technology and underscores the value of high-quality data, thoughtful model choices, and continuous monitoring. When done right, data-to-deployment workflows turn raw information into intelligent solutions that scale.